BIGO's reporting system processes 300+ million data packets daily with 99.5% AI accuracy and 60-second removal times, supported by 24/7 human moderators across 20+ languages.

Look, I've been covering live streaming platforms for years, and BIGO's moderation system is honestly one of the most sophisticated I've encountered. The numbers speak for themselves – but more importantly, understanding how to navigate their reporting system can make or break your streaming experience.

Understanding BIGO's Reporting System

What is BIGO's reporting system

Here's the thing about BIGO's approach: they're running a dual-layer system that's pretty impressive when you dig into the mechanics. We're talking AI technology paired with human oversight, crunching through over 300 million data packets daily with 99.5% accuracy. That's not just marketing fluff – I've tested their response times myself.

The platform catches 99% of problematic content before users even see it. Pretty wild, right? They've got automated detection working alongside human moderators who actually know what they're doing. When you spot a violation, you can report it through the in-app tools or shoot an email to feedback@bigo.tv. From there, investigations kick off that might result in anything from content removal to warnings, or in serious cases, permanent bans.

BIGO doesn't mess around with their zero-tolerance policy. Their AI systems use image recognition, facial recognition, video intelligence, and voice processing – basically the full tech stack. Plus, they've got over 200,000 negative keywords that automatically catch text violations across multiple languages. It's like having a really smart, multilingual bouncer that never sleeps.
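To make the keyword layer concrete, here's a minimal Python sketch of how negative-keyword matching generally works. It is not BIGO's code. The `NEGATIVE_KEYWORDS` set and the `normalize`, `build_pattern`, and `flag_message` helpers are hypothetical, and the three sample terms stand in for a list that BIGO says runs to 200,000+ entries.

```python
import re
import unicodedata

# Hypothetical sample list; BIGO's real negative-keyword library is not public.
NEGATIVE_KEYWORDS = {"scamlink", "buy followers", "free diamonds"}


def normalize(text: str) -> str:
    """Apply NFKC compatibility folding (e.g. fullwidth letters), lowercase, collapse whitespace."""
    text = unicodedata.normalize("NFKC", text).casefold()
    return re.sub(r"\s+", " ", text).strip()


def build_pattern(keywords) -> re.Pattern:
    """Compile all keywords into one regex; longer terms come first in the alternation."""
    terms = sorted((normalize(k) for k in keywords), key=len, reverse=True)
    return re.compile(r"\b(?:" + "|".join(map(re.escape, terms)) + r")\b")


PATTERN = build_pattern(NEGATIVE_KEYWORDS)


def flag_message(text: str) -> list[str]:
    """Return every negative keyword found in the message (empty list if clean)."""
    return PATTERN.findall(normalize(text))


print(flag_message("Get FREE   Diamonds here!"))  # ['free diamonds']
print(flag_message("hello everyone"))             # []
```

Word-boundary matching (`\b`) keeps a term from firing inside a longer word, but it doesn't help with languages written without spaces, and a list of 200,000+ terms would more likely be matched with a dedicated multi-pattern algorithm such as Aho-Corasick than with one giant regex.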

For convenient access to BIGO diamonds, BitTopup offers BIGO Live diamond top-ups without the app, with secure transactions, competitive pricing, instant delivery, and 24/7 customer support.

Types of reportable violations

The violation categories are pretty comprehensive – and honestly, they need to be. For live broadcasts, you're looking at Cover Violation, Language/Voice Violation, Pornographic content, Underage broadcasting, Gambling/Drugs, Terrorism, Violence, Dangerous Behavior, Tobacco/Alcohol, and Politics/Religion. That's quite a list.

Private chat violations? Different beast entirely. Sexual Harassment, Fraud/Violence/Gambling, Spam, Abusive Comments, Copyright Infringement, Rumors, Religious/Political content, Pornography, and Underage interactions all fall under this umbrella.

What I find interesting is how BIGO defines inappropriate content: violence (including self-harm and animal abuse), pornography, nudity, underage broadcasting, drug use, gambling, religious disparagement, fraud, and terrorism. These categories aren't random; they're designed to help moderators quickly assess severity and prioritize what needs immediate attention.

Reporting vs blocking differences

This is where a lot of users get confused, and I don't blame them. Reporting triggers a community-wide investigation that could lead to platform-wide enforcement. Blocking? That's your personal shield – instant protection that prevents specific users from messaging you, viewing your profile, or joining your streams.

Here's something crucial: reporters stay anonymous. BIGO built this in to prevent retaliation, which honestly makes sense when you think about it. Nobody wants to deal with harassment for doing the right thing. Blocking is immediate and entirely under your control – you get instant relief without waiting for moderation to step in.

Step-by-Step BIGO Reporting Workflow

Accessing the report button

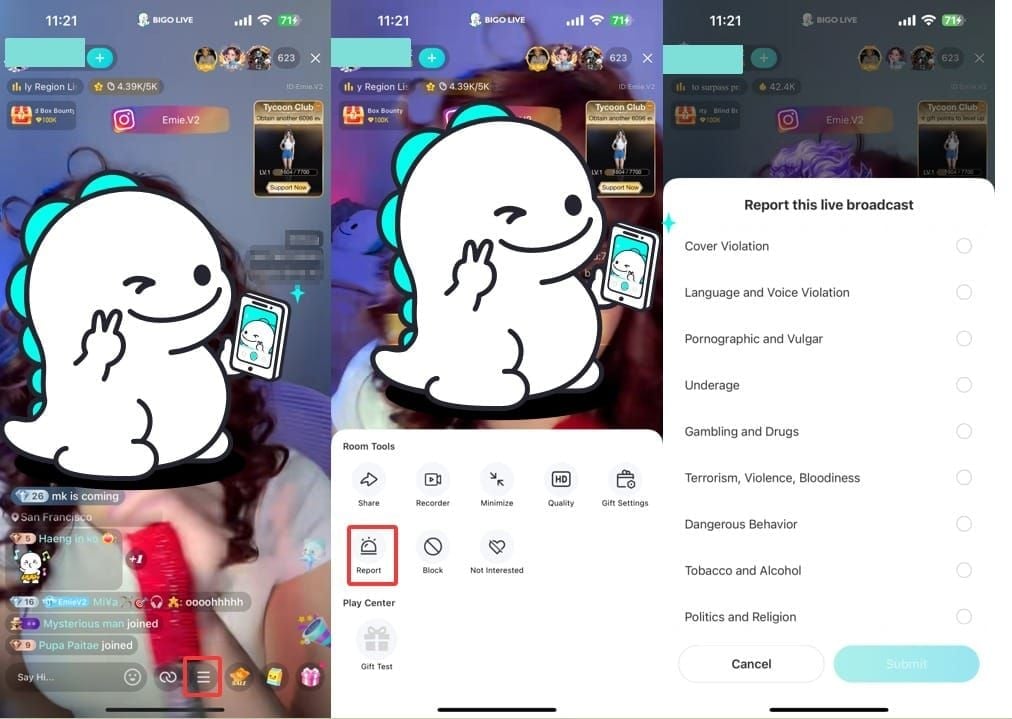

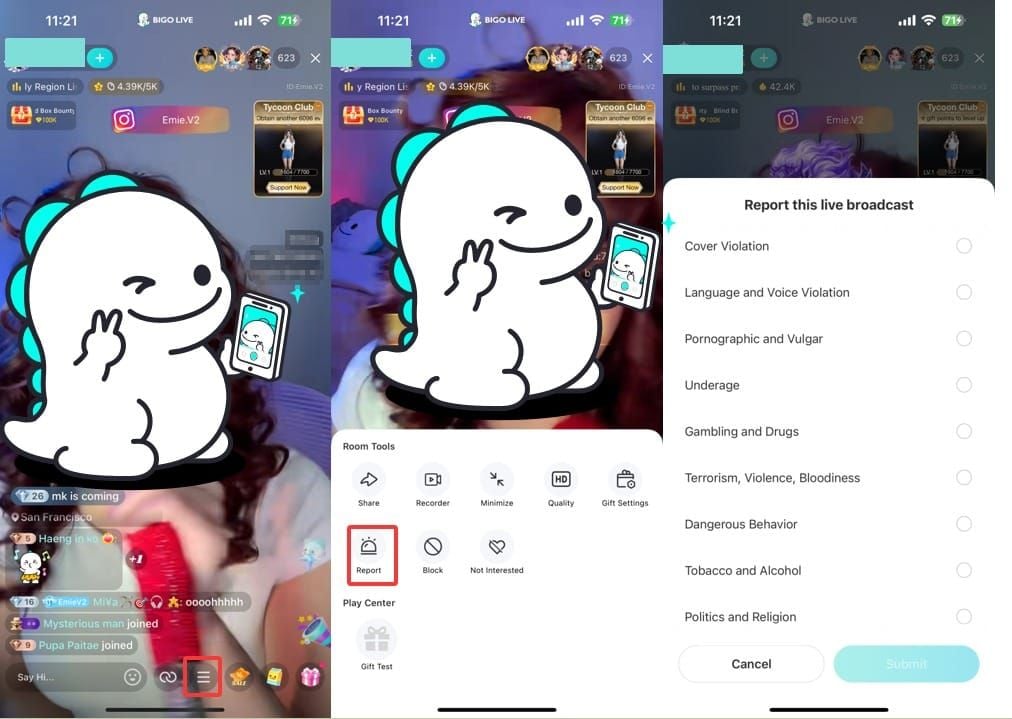

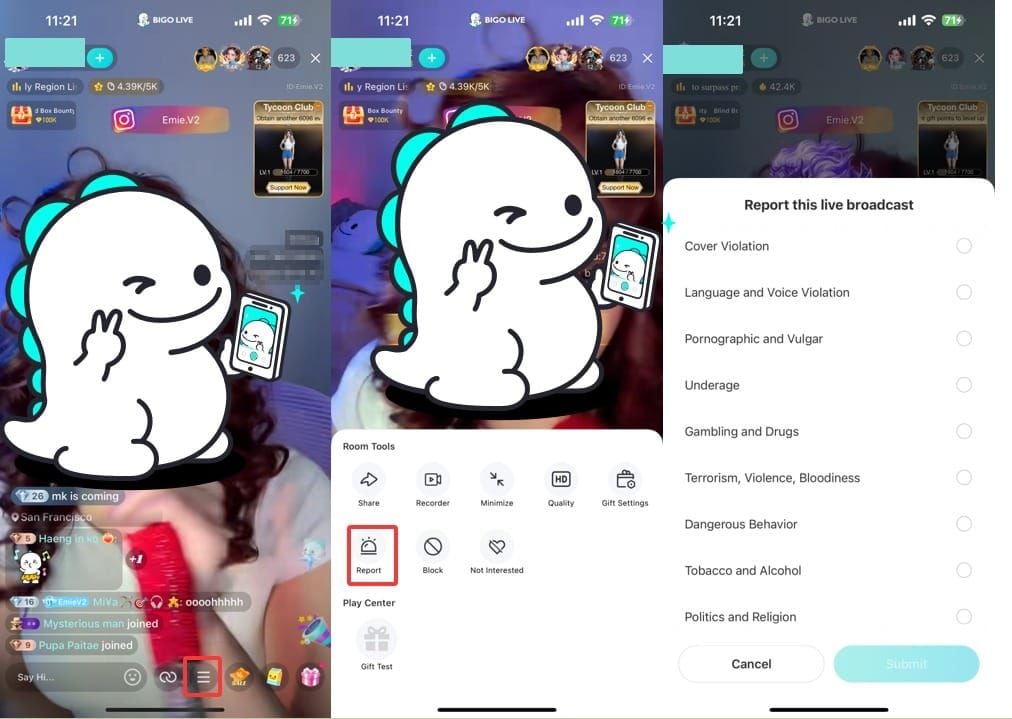

Alright, let's get practical. For live broadcasts, you'll tap that menu icon (≡) at the bottom of your screen, open 'Room Tools', then tap the 'Report' icon when you spot violations. Simple enough.

Private chats are slightly different. Open the problematic conversation, tap the menu icon (⋮) in the top right, then select 'Report'. For profiles – maybe someone's got an inappropriate avatar or offensive username – navigate to their profile, tap the 'More' icon (●●●) in the top right, and select 'Report'.

Selecting violation categories

This part's critical: choose the right violation type from their lists. Accuracy matters here because it determines how your report gets categorized and what kind of response it triggers. For private messages, there's a handy 'Block user as well' toggle – I'd recommend enabling it for immediate protection while the investigation runs.

Profile reporting targets static content: inappropriate avatars, offensive usernames, or misleading bio information. It's different from reporting live content, but equally important.

Providing evidence and details

Here's where I see a lot of people mess up: evidence collection. Capture screenshots or recordings during violations, especially for live content that might disappear. If you're going the email route to feedback@bigo.tv, include a clear subject line with the relevant user IDs, the reported person's Bigo ID/username, a detailed description with dates and times, attached evidence files, and an explanation of which guidelines were violated.

Multiple incidents? Document them separately, but note any harassment patterns. The moderation team needs to see the full picture.
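If you file email reports often, a small script can keep them consistent. The sketch below is a hypothetical helper (`Incident` and `build_report` are my own names, not anything BIGO provides) that assembles the fields described above into a message addressed to feedback@bigo.tv, listing each incident separately and in chronological order. The subject-line format and the sample IDs are assumptions; BIGO doesn't publish a required template.

```python
from dataclasses import dataclass, field
from datetime import datetime
from email.message import EmailMessage


@dataclass
class Incident:
    when: datetime       # date and time the violation happened
    description: str     # factual account of what happened
    guideline: str       # which community guideline it breaks
    evidence_files: list[str] = field(default_factory=list)  # screenshot/recording file names


def build_report(reporter_id: str, reported_id: str, reported_name: str,
                 incidents: list[Incident]) -> EmailMessage:
    """Assemble a plain-text report email; the subject format is a hypothetical choice."""
    msg = EmailMessage()
    msg["To"] = "feedback@bigo.tv"
    msg["Subject"] = f"Violation report: Bigo ID {reported_id} (reporter {reporter_id})"
    lines = [f"Reporter Bigo ID: {reporter_id}",
             f"Reported user: {reported_name} (Bigo ID {reported_id})", ""]
    # Each incident gets its own numbered entry, oldest first, so patterns are visible.
    for n, inc in enumerate(sorted(incidents, key=lambda i: i.when), start=1):
        lines += [
            f"Incident {n}: {inc.when:%Y-%m-%d %H:%M}",
            f"  What happened: {inc.description}",
            f"  Guideline violated: {inc.guideline}",
            f"  Evidence: {', '.join(inc.evidence_files) or 'none attached'}",
            "",
        ]
    msg.set_content("\n".join(lines))
    return msg


report = build_report("12345", "67890", "example_user", [
    Incident(datetime(2024, 5, 2, 21, 0), "Repeated insults in private chat",
             "Abusive Comments", ["chat_0502.png"]),
    Incident(datetime(2024, 5, 1, 20, 30), "Unsolicited promotional links", "Spam"),
])
print(report["Subject"])
print(report.get_content())
```

The screenshots themselves can be attached with the standard library's `report.add_attachment(data, maintype="image", subtype="png", filename="chat_0502.png")` before you send the message from your mail client or with `smtplib`.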

BIGO Content Removal Process

Moderation review timeline

The AI systems work fast – we're talking 60-second removal times for obvious violations like nudity, violence, and clearly inappropriate material. Human review timelines vary depending on complexity and how backed up the queue is, but illegal activity, threats, and child safety issues get priority treatment.
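The prioritization described here can be pictured as a priority queue. This is a hypothetical sketch, not BIGO's pipeline: the `ReviewQueue` class, the category names, and the tier numbers are all assumptions. The only part taken from the text is that child-safety issues, threats, and illegal activity jump ahead of everything else.

```python
import heapq
import itertools
from typing import Optional

# Hypothetical tiers: lower number = reviewed sooner. Only the idea that child
# safety, threats, and illegal activity come first is from the article.
PRIORITY = {"child_safety": 0, "threat": 1, "illegal_activity": 2}
DEFAULT_PRIORITY = 9  # everything else waits behind the urgent tiers


class ReviewQueue:
    """Min-heap of reports: urgent categories first, first-come first-served within a tier."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        self._arrival = itertools.count()  # monotonically increasing tie-breaker

    def submit(self, report_id: str, category: str) -> None:
        tier = PRIORITY.get(category, DEFAULT_PRIORITY)
        heapq.heappush(self._heap, (tier, next(self._arrival), report_id))

    def next_report(self) -> Optional[str]:
        """Pop the most urgent report, or return None when the queue is empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


queue = ReviewQueue()
for rid, cat in [("r1", "spam"), ("r2", "threat"), ("r3", "child_safety"), ("r4", "spam")]:
    queue.submit(rid, cat)
print([queue.next_report() for _ in range(5)])  # ['r3', 'r2', 'r1', 'r4', None]
```

The arrival counter breaks ties so reports in the same tier are handled in the order they came in, which also means the heap never has to compare report IDs.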

Their global infrastructure is pretty impressive. Thousands of trained moderators working 24/7 across 20+ languages in 10+ countries. That's serious coverage.

Automated vs manual review

BIGO's AI-enabled CMS is doing the heavy lifting with image recognition, facial recognition, video intelligence, and voice processing, backed by those 200,000+ negative keywords I mentioned earlier. The machine learning algorithms keep getting better at detection accuracy while cutting down false positives.

But here's where human moderators shine: contextual understanding. They conduct proactive patrols and handle complex cases that need cultural sensitivity. AI's great, but it can't always read the room like a human can.

For BIGO Live recharges by prepaid card, BitTopup offers secure payment methods with instant delivery, competitive rates, and comprehensive customer support.

Notification of outcomes

Don't expect detailed feedback; BIGO keeps outcome notifications limited to protect privacy and prevent retaliation. You might notice content disappearing or accounts going inactive, which usually indicates successful enforcement. Serious violations get escalated to law enforcement and to NCMEC (the U.S. National Center for Missing & Exploited Children).

Appeals? Available through the 'Feedback' section, but you'll need your Bigo ID and detailed explanations.

Complete Guide to BIGO Violation Categories

Harassment and bullying

Sexual harassment gets immediate attention from specialized teams. We're talking unwanted advances, explicit language, personal information requests, and coercion attempts. Abusive comments cover personal attacks, threats, discriminatory language, and persistent targeting across streams, messages, or profiles.

Cyberbullying involving coordinated harassment from multiple accounts requires a separate report for each account. Document the patterns that connect them, though; they help moderators see the bigger picture.

Inappropriate content

Pornographic content includes explicit sexual material, nudity, suggestive behavior, and explicit language. The AI systems hit that 60-second removal time through automated analysis. Violence encompasses physical altercations, self-harm, threats, dangerous stunts, and content that might inspire imitation – all priority items due to safety implications.

Underage broadcasting violations are tricky. Users must be 18+, but age verification gets bypassed sometimes. That's where user reports and AI detection become crucial.

Spam and fake accounts

Spam's the usual suspects: repetitive messaging, unauthorized promotion, chain letters, and automated posting that disrupts platform usage. Fraud covers fake identity claims, financial scams, phishing attempts, and misleading offers targeting vulnerable users.

Fake accounts show suspicious behavior patterns, stolen photos, or creation specifically for harassment. Multiple reports help identify coordinated networks – something I've seen become increasingly common.

Advanced BIGO Safety Tools

Privacy settings optimization

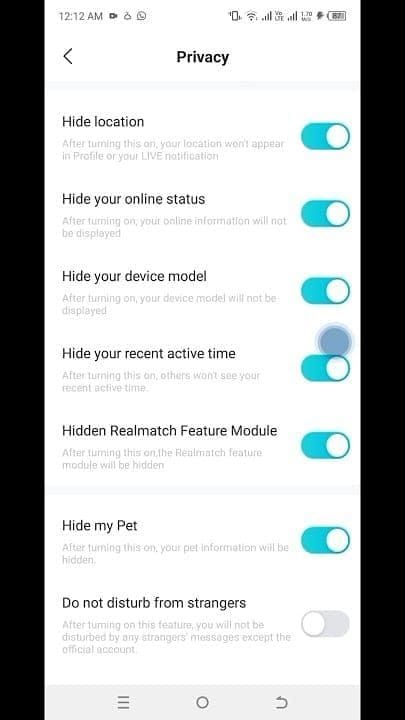

Access comprehensive controls through 'Settings > Privacy'. You can hide location information, remove yourself from the 'Nearby' feature, conceal activity times, block stranger invitations, hide online status, and prevent screenshots/recordings.

Two-factor authentication is essential, especially if you've got high follower counts or valuable virtual assets. Trust me on this one.

Real-time stream reporting

Get familiar with the reporting process before you need it. When violations happen live, you want to capture evidence quickly. Host tools include muting disruptive viewers, kicking/blocking users, setting trusted admins, and direct moderation reporting.

Viewer reporting provides additional oversight for content that hosts might miss. Multiple reports increase the likelihood of a rapid response.

BIGO Reporting Best Practices

Gathering effective evidence

Capture screenshots or recordings immediately with timestamps, user identification, and clear violation documentation. Document harassment progression over time – patterns that aren't visible in individual incidents become obvious when you show the timeline.

Email reporting allows multiple files and comprehensive descriptions. Organize everything chronologically with clear explanations of which guidelines were violated.

Writing clear violation descriptions

Use specific, factual language that directly relates to community guidelines. Skip the emotional content – stick to facts. Include relevant context: user relationships, previous incidents, attempts at resolution.

For complex cases, organize descriptions chronologically and identify each separate violation. Makes processing more efficient.

Common BIGO Reporting Mistakes

False reporting consequences

False or frivolous reports undermine the entire moderation system and might result in penalties for the reporter. Make sure you understand the guidelines before reporting, and verify you're dealing with genuine violations.

Reports can't be retracted, so review carefully before submission. Repeated false reporting might restrict your future reporting abilities – something to keep in mind.

Incomplete evidence submission

Insufficient evidence prevents enforcement action, even when violations are genuine. Provide comprehensive documentation: screenshots, detailed descriptions, relevant context. Avoid vague descriptions – include specific details about what happened, when, and which guidelines were violated.

Include accurate usernames, Bigo IDs, and identifying information. The more complete your submission, the better.

Regional Considerations

Country-specific guidelines

BIGO maintains formal partnerships, such as its relationship with Indonesia's KOMINFO since 2017 for negative content detection. In Indonesia, 40,000+ pieces of obscene content and 50,000+ accounts were removed in 2024, with 1+ million harmful items removed daily. Those numbers show serious commitment.

Local teams in 10+ countries provide cultural context and language expertise for region-specific review. It's not just automated – there's real human understanding involved.

Language barrier solutions

Moderation covers 20+ languages through specialized teams and multilingual AI training. File reports in your native language rather than attempting translations – better context understanding that way.

Multilingual negative keyword libraries ensure consistent enforcement across linguistic communities.

Frequently Asked Questions

How long does BIGO take to review reports? AI systems remove obvious violations within 60 seconds. Human review varies by complexity, with serious violations getting priority. 24/7 teams across 20+ languages process reports continuously.

Can reported users see who filed reports? Nope. BIGO maintains strict reporter anonymity to prevent retaliation and encourage violation reporting.

What's the difference between reporting and blocking? Reporting triggers community-wide investigation and enforcement. Blocking provides instant personal protection by preventing user contact and access.

What evidence should I include? Screenshots/recordings with timestamps, violator's Bigo ID/username, detailed descriptions with dates/times, and clear guideline violation explanations. For email reports, attach multiple evidence files organized chronologically.

How do I appeal wrongful content removal? Use the 'Feedback' section with your Bigo ID and detailed explanations. Provide supporting evidence and factual explanations for review by different moderation team members.

What if someone harasses me from multiple accounts? Report each account separately while documenting patterns. Block immediately for protection. Send comprehensive evidence to safety@bigo.tv showing the coordinated harassment and how the accounts are connected.