Chamet's AI filtering system, updated on January 3, 2025, scans each stream within 3-15 minutes of broadcast start. Technical glitches, not actual violations, account for 60% of bans, so understanding what triggers the AI and how to appeal protects your account from unwarranted strikes.

Understanding Chamet's AI Moderation System

After Google removed Chamet from the Play Store on August 27, 2023, for violating its user-generated content policies, the platform intensified moderation. The app had operated from 2020 until this enforcement action prompted major changes.

Chamet now combines 24/7 moderation with AI-powered filtering that detects violations in real time. AI scans complete within 3-15 minutes of broadcast start, and each review takes about 30 seconds to flag problematic content.

Buy Chamet Diamonds Cheap through BitTopup for secure transactions while focusing on compliance.

2025 Changes: Manual to AI Review

The January 3, 2025 update banned explicit modifications and tightened enforcement of borderline content. The shift from manual to AI-driven detection made flagging both faster and more frequent.

Before 2025, human moderators reviewed reports after a delay. The new AI scans every active stream simultaneously, eliminating that lag but increasing false positives, because algorithms struggle with contextual nuances that humans grasp naturally.

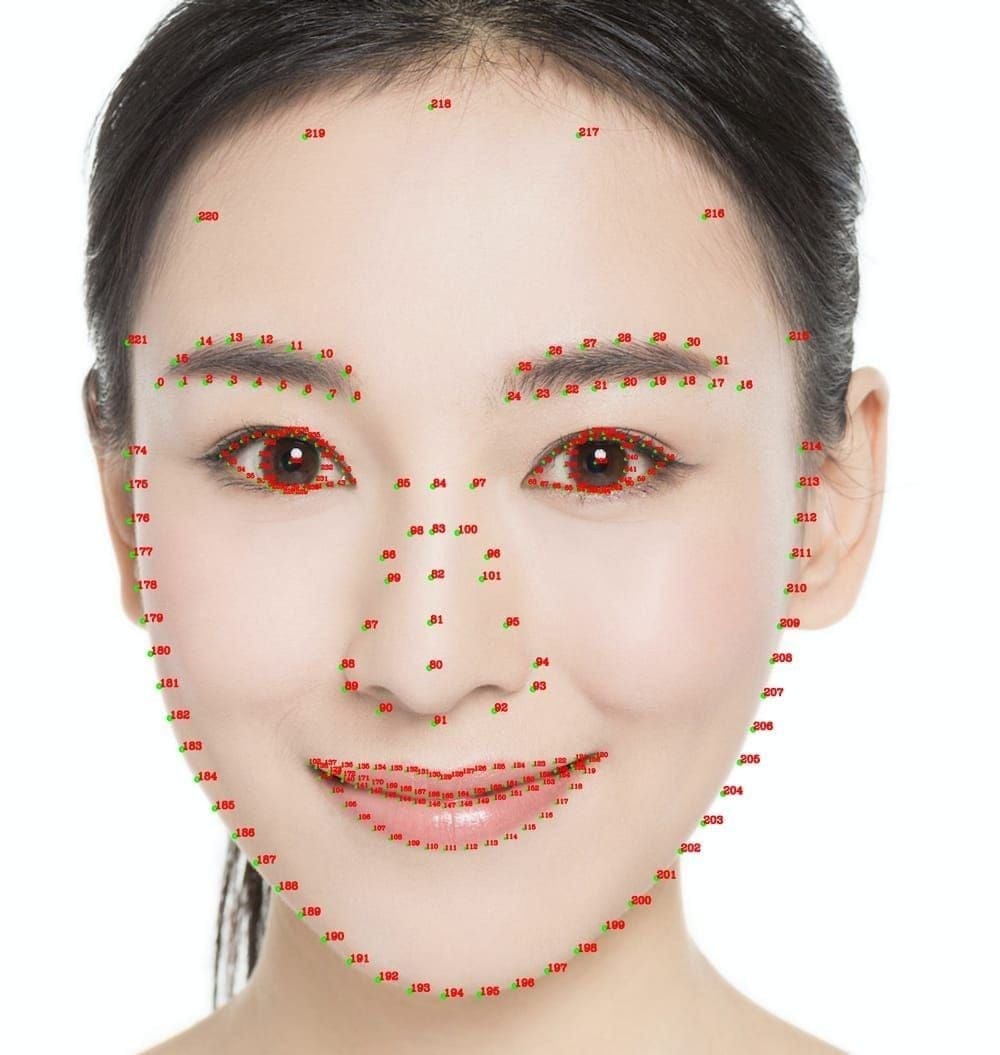

Real-Time Detection Algorithm

AI filtering works by continuously analyzing frames during broadcasts. The system compares visual elements, audio patterns, and behavioral indicators against datasets of prohibited content. When it detects a potential violation, it issues a warning and may interrupt the stream.

Detection happens at three checkpoints: an initial scan at stream start, continuous monitoring during the broadcast, and post-stream analysis. Each applies a different sensitivity threshold, and live monitoring prioritizes speed over accuracy. This aggressive approach is why normal streams sometimes trigger warnings.
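The effect of a speed-first threshold can be shown with a toy model. Chamet has not published how its classifier scores content, so the scores, checkpoint names, and threshold values below are invented purely for illustration; the point is only that lowering the bar for flagging catches more borderline but compliant frames.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical per-checkpoint thresholds: a frame is flagged when its score
# meets or exceeds the threshold, so a LOWER threshold flags MORE frames.
# These values are invented for illustration, not Chamet's real settings.
THRESHOLDS = {
    "initial_scan": 0.80,
    "live_monitoring": 0.60,  # speed over accuracy: lower bar to flag
    "post_stream": 0.85,
}


@dataclass
class Frame:
    timestamp_s: float
    score: float        # hypothetical classifier confidence of a violation
    is_violation: bool  # ground truth, knowable only in this toy example


def flagged(frames: List[Frame], threshold: float) -> List[Frame]:
    """Frames whose score meets or exceeds the threshold."""
    return [f for f in frames if f.score >= threshold]


def false_positives(frames: List[Frame], threshold: float) -> int:
    """Number of flagged frames that were not actual violations."""
    return sum(1 for f in flagged(frames, threshold) if not f.is_violation)


# A fully compliant stream where lighting and clothing push some scores up.
stream = [
    Frame(10.0, 0.35, False),
    Frame(20.0, 0.62, False),  # e.g. a harsh shadow across the torso
    Frame(30.0, 0.71, False),  # e.g. adjusting a beige cardigan
    Frame(40.0, 0.40, False),
]

for name, threshold in THRESHOLDS.items():
    print(f"{name}: {false_positives(stream, threshold)} false positive(s)")
# initial_scan: 0 false positive(s)
# live_monitoring: 2 false positive(s)
# post_stream: 0 false positive(s)
```

With these made-up numbers, the same compliant stream passes the stricter checkpoints but picks up two false positives under live monitoring.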

Why Automated Scanning Exists

The Play Store removal created urgent pressure to demonstrate better moderation. Automated scanning provides enforcement at a scale that manual teams can't match across global time zones and languages.

Features such as 18+ age verification and end-to-end encryption require proactive monitoring to prevent misuse. Because encryption limits user reports, AI serves as the primary enforcement mechanism. Violations result in:

First offense: temporary suspension

Repeat violations: permanent suspension

Severe cases: potential legal action

Why Normal Streams Get Flagged

Explicit content concerns appear in 67.6% of reviews, yet 60% of bans stem from technical glitches. The AI cannot reliably distinguish innocent content from violations.

Streamers receive warnings despite appropriate clothing, professional conduct, and clean environments, and the platform's limited explanation of what triggered them adds to the frustration.

False Positive Statistics

Because technical glitches account for 60% of bans, erroneous enforcement is common. Temporary bans last 24-48 hours and cost streamers income and engagement. Appeals backed by screenshots succeed 90% of the time, but the initial disruption still damages careers.

Lighting Misinterpretation

Poor lighting is a common source of false positives. Harsh shadows across the face or body create patterns the AI misreads as inappropriate exposure. Backlit silhouettes may also trigger warnings, because algorithms struggle to distinguish clothing boundaries in low-contrast conditions.

Flickering lights and rapid changes in illumination also confuse detection. Because the AI analyzes streams frame by frame, sudden brightness variations can register as attempts to obscure content. Consistent, even lighting from multiple angles minimizes these misreads.
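If you suspect flicker, you can check a short test recording before going live. This sketch assumes you already have the mean brightness of each frame on a 0-255 scale (for example, computed with an image library of your choice); the 25-point tolerance is an arbitrary assumption for your own testing, not a value Chamet uses.

```python
from typing import List, Sequence, Tuple


def brightness_jumps(
    brightness: Sequence[float], max_jump: float = 25.0
) -> List[Tuple[int, float]]:
    """Return (frame_index, delta) for each frame whose mean brightness
    differs from the previous frame by more than max_jump.

    brightness: mean luminance per frame, 0-255 scale (assumed input).
    max_jump:   hypothetical tolerance; tune it for your own setup.
    """
    jumps = []
    for i in range(1, len(brightness)):
        delta = brightness[i] - brightness[i - 1]
        if abs(delta) > max_jump:
            jumps.append((i, delta))
    return jumps


def flicker_ratio(brightness: Sequence[float], max_jump: float = 25.0) -> float:
    """Fraction of frame-to-frame transitions that count as sudden jumps."""
    transitions = len(brightness) - 1
    if transitions <= 0:
        return 0.0
    return len(brightness_jumps(brightness, max_jump)) / transitions


# Made-up samples: steady lighting vs. a flickering lamp.
steady = [128, 130, 129, 131, 130, 129]
flicker = [128, 90, 131, 88, 130, 92]

print(brightness_jumps(steady))   # []
print(brightness_jumps(flicker))  # [(1, -38), (2, 41), (3, -43), (4, 42), (5, -38)]
print(f"{flicker_ratio(flicker):.0%} of transitions are sudden jumps")  # 100% ...
```

A high ratio on a test recording suggests replacing or stabilizing the light source before streaming.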

Camera Angle Triggers

Low-angle shots that emphasize body proportions, as well as high-angle perspectives, increase false positives. AI training datasets contain few examples of unconventional angles in compliant contexts.

Close-ups that crop the head or focus on the torso trigger warnings because algorithms associate these compositions with inappropriate content. Wide shots that include beds, couches, or other private-space furniture raise flags even when the streamer is fully clothed.

Background Environment Issues

Overlooked background content causes a significant share of false positives. Posters, artwork, and decorative items may contain imagery the AI interprets as violations; classical art reproductions or fashion photography featuring partial nudity or suggestive poses can trigger warnings.

Reflective surfaces such as mirrors, windows, and glossy furniture create further complications. The AI analyzes reflections as separate content and may flag distorted images. Roommates or family members who accidentally enter the frame can also trigger warnings if misinterpreted.

Common Innocent Triggers

Personal presentation choices frequently cause unwarranted warnings. The AI cannot understand context, cultural norms, or individual expression, so streamers from diverse backgrounds run into trouble when their normal attire or gestures differ from what the AI was trained on.

Clothing Colors and Patterns

Skin-tone clothing carries the highest false-positive risk: beige, tan, and nude colors may be interpreted as exposed skin. Streamers wearing these colors should expect increased scrutiny.

Tight-fitting or form-revealing clothing can trigger warnings through silhouette analysis, regardless of actual coverage. Patterns with abstract shapes, particularly circular or curved designs, can be misinterpreted at low resolution or during motion.

Body Language Misreads

Natural movements can register as violations. Stretching, adjusting clothing, or touching your face or hair can be misinterpreted as suggestive behavior, and dancing, exercise, or enthusiastic gestures may trigger warnings if they resemble prohibited content patterns.

Gestures that are innocent in their cultural context may be flagged as inappropriate. Hand positions, postures, and facial expressions carry different meanings across cultures, but the AI applies a single standard. As a result, non-Western streamers face a disproportionate false-positive risk.

Accidental Background Detection

The AI scans beyond obvious items. Book covers, magazine pages, and product packaging can trigger warnings if they contain flagged imagery, and text on clothing, posters, or other objects is scanned for prohibited language.

Pets moving through the frame occasionally cause warnings when their movements create unusual patterns, and shadows cast by off-camera objects can form shapes the AI misinterprets.

Language Filter Errors

Audio analysis for prohibited words and phrases produces frequent false positives when an accent makes an innocent word sound like a banned term. Non-native speakers are especially affected: their speech patterns can trigger warnings even when their language is entirely appropriate.

Background conversations, TV audio, and music can also trigger language warnings. The AI cannot distinguish the streamer's speech from ambient audio, so it flags environmental sounds outside your control.

Warning Strike System

Chamet enforces its policies through graduated penalties that escalate with repeated violations. Account suspension is the primary tool: temporary suspensions serve as warnings, while permanent suspensions end careers. Warning strikes track violations over time and may expire after a period of compliant behavior, but the platform says little about how long strikes last or how they are removed.

Warning Accumulation Timeline

A first violation brings a 24-48 hour temporary suspension plus a warning strike. A second violation within an undefined timeframe brings a longer suspension and an additional strike. The exact escalation timeline is unclear, because Chamet doesn't specify how long strikes last or how many lead to permanent suspension.

Repeated violations lead to permanent suspension. When those violations are false positives, technical errors beyond your control can end your career.

Impact on Account Standing

Strikes likely influence algorithmic visibility and recommendations. Accounts in poor standing appear to receive less discovery promotion, which limits growth. This invisible penalty compounds the income lost to suspensions and causes long-term damage.

Frequent suspensions erode viewer trust even when they stem from false positives, because audiences assume a violation occurred. Regular viewers may also migrate to competitors during a suspension and never return.

Warnings vs. Suspensions vs. Bans

Warnings: The first level of enforcement, notifying you of a violation without restricting access. They are meant to be educational but lack specific information about the trigger.

Temporary suspensions: 24-48 hour streaming restrictions with immediate financial impact. Use this period to review policies, adjust your setup, and prepare an appeal.

Permanent bans: The final level of enforcement, removing access entirely. A permanent ban effectively ends your career on the platform, and the appeal process is the only recourse.

How to Appeal False Warnings

Appeal as soon as you receive an unwarranted warning or suspension. Appeals backed by screenshots succeed 90% of the time, which shows the platform does overturn erroneous decisions when presented with evidence.

Documentation is critical. Keep broadcast records, including screenshots of your setup, clothing, and background. Without them, your appeal relies solely on the platform's own review of the flagged content.

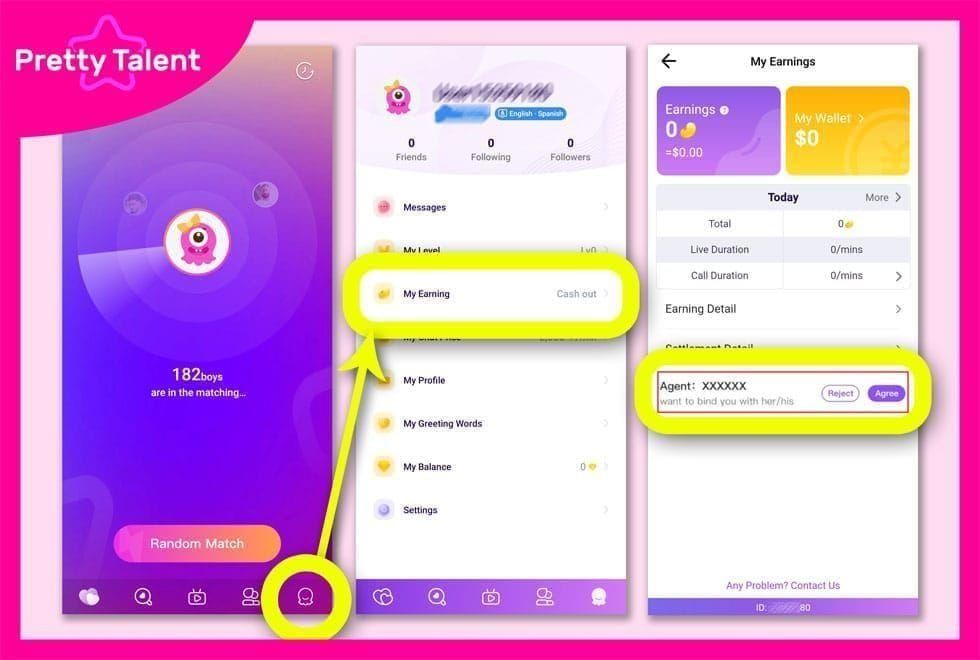

Accessing Appeal Dashboard

When you receive a warning or suspension, open the appeal function in the Chamet app right away. Look for an option labeled Appeal, Contest Decision, or similar in your account settings or in the notification message.

An appeal requires:

User ID (8-12 digits)

Date and time of flagged stream

Description of why enforcement was erroneous
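Gathering these three items in one place before you submit helps avoid incomplete appeals. The sketch below checks that the user ID is 8-12 digits and composes plain text you could paste into the appeal form; the AppealDraft class, its fields, and the evidence filename are hypothetical helpers, not part of any Chamet API.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime
from typing import List

# 8-12 ASCII digits, matching the user ID requirement above.
USER_ID_PATTERN = re.compile(r"[0-9]{8,12}")


@dataclass
class AppealDraft:
    """Hypothetical container for the items an appeal requires."""
    user_id: str
    flagged_at: datetime
    explanation: str
    evidence_files: List[str] = field(default_factory=list)

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the draft looks complete."""
        problems = []
        if not USER_ID_PATTERN.fullmatch(self.user_id):
            problems.append("User ID must be 8-12 digits.")
        if not self.explanation.strip():
            problems.append("Explain why the enforcement was erroneous.")
        if not self.evidence_files:
            problems.append("Attach at least one screenshot or clip.")
        return problems

    def render(self) -> str:
        """Compose plain appeal text to paste into the app's appeal form."""
        lines = [
            f"User ID: {self.user_id}",
            f"Flagged stream: {self.flagged_at:%Y-%m-%d %H:%M}",
            f"Explanation: {self.explanation}",
        ]
        if self.evidence_files:
            lines.append("Evidence: " + ", ".join(self.evidence_files))
        return "\n".join(lines)


draft = AppealDraft(
    user_id="12345678",
    flagged_at=datetime(2025, 1, 10, 15, 47),
    explanation="Warning while adjusting a beige cardigan; fully clothed throughout.",
    evidence_files=["setup_1547.png"],
)
print(draft.validate())  # []
print(draft.render())
```

Running validate() before submitting catches the most common omissions: a mistyped ID, a missing explanation, or no attached evidence.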

Evidence for Successful Appeals

Screenshots proving compliance form the foundation of an appeal. Capture images showing your full setup: camera angle, background, clothing, and any potential triggers. Time-stamped screenshots from the flagged stream provide the strongest evidence.

Your written explanation should identify the likely false-positive trigger and explain how you complied. For example: "Warning received at 3:47 PM while I was adjusting a beige cardigan. The AI likely misinterpreted the skin-tone fabric as exposed skin. Attached screenshots show I was fully clothed throughout the stream."

Video clips of the flagged segments provide especially strong evidence. Keep them brief and clear, and make sure they directly address the alleged violation.

Response Times and Success Rates

Expect a response within 24-72 hours for straightforward cases. Complex appeals may take a week or more.

With a 90% success rate for screenshot-backed appeals, it pays to appeal every unwarranted action rather than accept an erroneous penalty.

If Appeal Denied

Email chamet.feedback@gmail.com with your user ID, the appeal reference number, and any additional evidence. Contacting support directly may put your case in front of different reviewers for a fresh evaluation.

Keep your message professional and factual, for example: "My appeal for the [date/time] suspension was denied. User ID: [number]. I am providing additional evidence demonstrating policy compliance." Attach your documentation and ask for a specific explanation of the violation.

If multiple appeals fail, persistent false positives may indicate a fundamental mismatch between your streaming style and the AI's parameters. Adjust your approach based on the triggers you've identified, or reconsider whether the platform is the right fit.

Prevention: Stream Setup Best Practices

Prevention beats appeals. Optimizing your environment and presentation minimizes false positives while preserving your authentic style, and many of these adjustments improve overall stream quality as well.

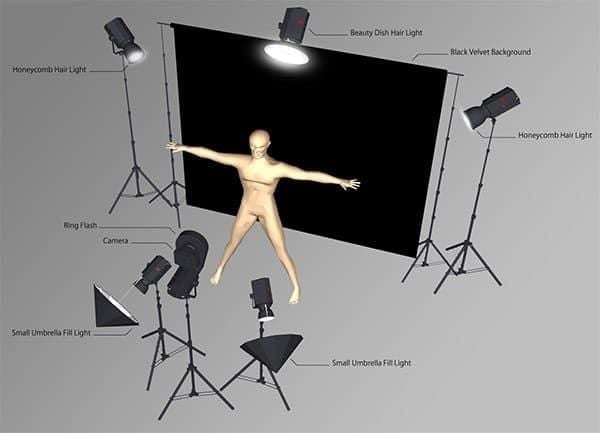

Optimal Lighting Configuration

Three-point lighting provides the most reliable illumination:

Key light: 45° to one side of the camera

Fill light: 45° to the opposite side, at lower intensity

Back light: behind and above you, separating you from the background

This setup eliminates harsh shadows and provides even illumination, helping the AI distinguish clothing, skin, and background.
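To turn the angles into actual placements, the sketch below puts each light on a circle around you, with the camera straight ahead. The 1.5 m distance and the coordinate convention are assumptions for illustration; measure from your own seat and adjust for your room.

```python
import math
from typing import Dict, Tuple


def light_positions(distance_m: float = 1.5) -> Dict[str, Tuple[float, float]]:
    """Return (x, y) floor positions in metres for a three-point setup.

    Assumed convention: you sit at the origin facing +y, and the camera is
    straight ahead on the +y axis. Angles are measured from the camera axis:
    key at +45 deg, fill at -45 deg, back light directly behind (180 deg).
    The distance is a placeholder, not a Chamet requirement.
    """
    def polar(angle_deg: float) -> Tuple[float, float]:
        a = math.radians(angle_deg)
        # sin gives the sideways offset, cos the forward/backward offset
        return (round(distance_m * math.sin(a), 2),
                round(distance_m * math.cos(a), 2))

    return {
        "key": polar(45),    # one side of the camera
        "fill": polar(-45),  # opposite side; run it at lower intensity
        "back": polar(180),  # behind you; mount it above head height
    }


for name, (x, y) in light_positions().items():
    print(f"{name:>4}: x={x:+.2f} m, y={y:+.2f} m")
```

At 1.5 m, the key and fill lights each land about 1.06 m to the side and 1.06 m forward of you, and the back light sits 1.5 m directly behind.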

Avoid backlighting that silhouettes your form: cover windows behind you or balance them with strong front lighting. Keep color temperature consistent, since shifts can make skin-tone clothing look like exposed skin.

Adjustable lighting allows real-time optimization, and dimmable LEDs provide flexibility for different clothing colors. Watch your stream preview: what you see is what the AI analyzes.

Camera Positioning and Framing

Position the camera at eye level or slightly above. Standard framing gives a clear view of your face and upper body without the unusual perspectives that confuse the AI.

Frame from mid-chest up, making sure your head isn't cropped and there is adequate space above it. This establishes you as the primary subject while still providing context. Extreme close-ups, or wide shots that make you small in the frame, increase the risk of misinterpretation.

Keep framing consistent throughout the stream. Sudden changes trigger AI re-analysis and create more opportunities for false detection, so make adjustments during natural breaks rather than mid-activity.
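You can sanity-check framing from a still of your preview by measuring where your head sits. In this sketch the headroom and head-size limits are rough, hypothetical proportions meant to approximate mid-chest-up framing, not platform rules; the head's top and bottom pixel rows are values you would read off the preview yourself.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HeadBox:
    """Vertical extent of your head in the preview, in pixels from the top."""
    top: int
    bottom: int


def framing_issues(
    head: HeadBox,
    frame_height: int,
    min_headroom: float = 0.05,   # hypothetical limits; tune to taste
    max_headroom: float = 0.20,
    min_head_share: float = 0.15,
    max_head_share: float = 0.45,
) -> List[str]:
    """Return human-readable framing problems; an empty list means OK."""
    issues = []
    if head.top <= 0:
        issues.append("Head is cropped at the top of the frame.")
    else:
        headroom = head.top / frame_height
        if headroom < min_headroom:
            issues.append("Too little space above your head.")
        elif headroom > max_headroom:
            issues.append("Too much empty space above your head.")
    # Share of the frame height your head occupies: a proxy for shot size.
    head_share = (head.bottom - head.top) / frame_height
    if head_share > max_head_share:
        issues.append("Extreme close-up: move the camera back.")
    elif head_share < min_head_share:
        issues.append("You appear small in the frame: move closer.")
    return issues


print(framing_issues(HeadBox(top=108, bottom=432), frame_height=1080))  # []
print(framing_issues(HeadBox(top=0, bottom=700), frame_height=1080))
```

The second call reports both a cropped head and an extreme close-up, the two compositions the AI most readily misreads.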

Background Safety Checklist

Audit your background thoroughly before each stream:

Remove or cover artwork and posters with human figures, particularly any showing partial nudity

Remove reflective surfaces from view: mirrors, glass frames, windows, glossy furniture

Use plain, neutral backgrounds that provide clear contrast with your appearance

Check for reflections from the camera's perspective

Simple backgrounds also improve aesthetics by keeping the focus on you.

Clothing Recommendations

Avoid skin-tone colors (beige, tan, nude) that can be misinterpreted as exposed skin. Choose colors that clearly contrast with your skin tone: darker or brighter hues that the AI can easily distinguish.

Choose clothing with clear, defined edges rather than form-fitting or flowing garments that create ambiguous silhouettes. Layering adds visual definition that helps the AI assess coverage.

Minimize clothing adjustments during streams, since these movements frequently trigger warnings. Make adjustments off-camera or during breaks.

Advanced Tips: AI Behavior Patterns

Experienced streamers develop an intuitive understanding of the AI through repeated exposure. The AI undergoes continuous training and updates, so its sensitivity and triggers evolve: what worked last month may not prevent warnings today.

Peak Sensitivity Times

The AI becomes more sensitive after policy updates or high-profile enforcement actions. The January 3, 2025 changes likely brought heightened scrutiny as the updated algorithms were deployed. Take extra care in the weeks following a policy change, when false-positive rates tend to spike.

Training periods also correlate with more false positives as the AI processes new data, and community reports of widespread warnings may signal an ongoing update. During these periods, make conservative content choices and optimize your setup meticulously.

Regional Moderation Differences

Content policies and enforcement vary across regions due to different legal requirements and cultural standards. Familiarize yourself with region-specific guidelines.

The AI may apply different sensitivity thresholds based on your registered location or your audience's geography. If you have an international audience, assume the strictest standards apply.

Monitoring Account Health

You can infer your account standing from warning frequency, suspension history, and platform communications about compliance. Keep personal records of enforcement actions and appeals so you can spot patterns.
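A simple structured log makes patterns easier to spot than scattered notes. The sketch below is one hypothetical way to record enforcement actions and summarize which suspected triggers recur and how your appeals have fared; the field names and sample entries are illustrative, not data from Chamet.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class EnforcementRecord:
    """One enforcement action from your personal log (fields are illustrative)."""
    day: date
    action: str             # e.g. "warning" or "temporary suspension"
    suspected_trigger: str  # your best guess, e.g. "beige clothing"
    appealed: bool = False
    overturned: Optional[bool] = None  # None until the appeal is decided


def trigger_counts(log: List[EnforcementRecord]) -> Counter:
    """How often each suspected trigger appears in the log."""
    return Counter(r.suspected_trigger for r in log)


def appeal_success_rate(log: List[EnforcementRecord]) -> Optional[float]:
    """Share of decided appeals that were overturned, or None if none are decided."""
    decided = [r for r in log if r.appealed and r.overturned is not None]
    if not decided:
        return None
    return sum(1 for r in decided if r.overturned) / len(decided)


log = [
    EnforcementRecord(date(2025, 1, 6), "warning", "beige clothing", True, True),
    EnforcementRecord(date(2025, 1, 9), "temporary suspension", "backlighting", True, False),
    EnforcementRecord(date(2025, 1, 14), "warning", "beige clothing", True),  # pending
]

print(trigger_counts(log).most_common(1))  # [('beige clothing', 2)]
print(appeal_success_rate(log))            # 0.5
```

A trigger that keeps topping the counts is the first thing to change in your setup, and the pending appeal is excluded from the success rate until it is decided.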

Sudden changes in visibility, recommendations, or growth may indicate algorithmic penalties. Consistent compliance over an extended period should gradually restore normal treatment.

Stream Safety Settings

Enable 18+ age verification and two-factor authentication. End-to-end encryption protects communications but doesn't prevent AI scanning of public streams.

Review all privacy and safety settings, configure them to suit your content type and audience, and check for new settings after each app update.

Protecting Your Career Long-Term

Sustainable success requires balancing self-expression with compliance. AI moderation is here to stay, so adaptation is essential. Develop systematic approaches to prevention, documentation, and appeals.

Diversify to reduce dependence on a single platform. Maintain a presence on alternatives and build direct relationships with your audience through social media, so that no single enforcement action can eliminate your career.

Chamet Top Up Online through BitTopup provides secure transactions and competitive pricing for reliable support.

Building Reputation Score

Consistent compliance over extended periods likely improves your standing with both the AI and human reviewers. Accounts with a long history of adherence may receive more lenient treatment or faster appeal approvals.

Engage positively with community features, respond professionally to viewers, and keep a regular schedule. This demonstrates serious commitment and may influence how borderline cases are evaluated.

Staying Updated on Changes

Monitor official Chamet communications for policy announcements; the January 3, 2025 changes show that the rules evolve. Subscribe to newsletters, follow verified social media accounts, and review the terms and guidelines regularly.

Community forums provide early warnings about emerging AI patterns and enforcement trends. When multiple streamers report similar triggers, you can avoid those issues before they affect you.

Emergency Response Plan

Develop a standard procedure before any warning occurs:

Immediately document the flagged stream with screenshots

Prepare appeal materials

Contact support through the appropriate channels

Tell your audience what is happening

Maintain backup contact methods so you can notify your core audience of suspensions and return dates. This preserves relationships and demonstrates professionalism. Consider creating content on alternative platforms during a suspension.

BitTopup Support

Successful streaming requires reliable access to platform resources and responsive support. BitTopup provides comprehensive Chamet services: secure diamond purchases, account support, and expert guidance for platform challenges, along with competitive pricing, fast delivery, and excellent customer service.

Whether restoring resources after suspension, accessing exclusive features, or ensuring consistent diamond availability, BitTopup's wide coverage and high ratings make it the preferred choice for serious streamers.

FAQ

Why does Chamet flag my stream when I'm fully clothed? The AI scans streams within 3-15 minutes of broadcast start and may misinterpret skin-tone clothing, lighting, or camera angles as violations. Technical glitches, not actual violations, cause 60% of bans.

How long does a warning last? A first violation brings a 24-48 hour temporary ban. Strikes remain on record for an unspecified duration, and repeated violations lead to permanent suspension.

What happens after 3 strikes? Chamet doesn't specify how many strikes lead to permanent suspension. Initial violations result in temporary suspension, and repeated violations in permanent suspension. Severe violations may trigger legal action regardless of strike count.

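How do I appeal false decisions? Start with the appeal function in the Chamet app, providing your user ID (8-12 digits), the date and time of the flagged stream, and supporting evidence such as screenshots. If the appeal is denied, email chamet.feedback@gmail.com with the same details plus your appeal reference number. Appeals with proof succeed 90% of the time, and straightforward cases typically get a response within 24-72 hours.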

Can lighting trigger warnings? Yes. Harsh shadows, backlighting, and flickering lights can all cause AI misinterpretation. Use consistent, even three-point lighting to minimize misreads.

What content is sensitive in 2025? Pornographic content, harassment, discrimination, and hate speech are prohibited. The January 3, 2025 update banned explicit modifications and tightened enforcement. Chamet requires 18+ verification and strictly prohibits explicit or adult content.

Protect your Chamet career with reliable support! Visit BitTopup for secure services, instant diamonds, and exclusive resources, plus the competitive pricing, fast delivery, and excellent customer service that serious streamers depend on.